FreeRTOS

Multitasking in Small Embedded Systems

- Most embedded real-time applications include a mix of both hard and soft real-time requirements.

- Soft real-time requirements

- State a time deadline, but breaching the deadline would not render the system useless.

- E.g., responding to keystrokes too slowly

- Hard real-time requirements

- State a time deadline, and breaching the deadline would result in absolute failure of the system.

- E.g., a driver’s airbag would be useless if it responded to a crash event too slowly.

FreeRTOS

- FreeRTOS is a real-time kernel/scheduler on top of which MCU applications can be built to meet their hard real-time requirements.

- Allows MCU applications to be organized as a collection of independent threads of execution.

- Decides which thread should be executed by examining the priority assigned to each thread.

- Assumes a single-core MCU, where only one thread can be executing at any one time.

The simplest case of task priority assignments

- Assign higher priorities to threads that implement hard real-time requirements, and lower priorities to threads that implement soft real-time requirements.

- As a result, hard real-time threads are always executed ahead of soft real-time threads.

- But priority assignment decisions are not always that simple.

- In general, task prioritization can help ensure an application meets its processing deadlines.

- In FreeRTOS, each thread of execution is called a ‘task’. A task is a simple C function that takes a void* parameter and returns nothing (void).

Why use a real-time kernel

- For a simple system, many well-established techniques can provide an appropriate solution without the use of a kernel.

- For a more complex embedded application, a kernel would be preferable.

- But where the crossover point occurs will always be subjective.

- Besides ensuring an application meets its processing deadlines, a kernel can bring other, less obvious benefits.

Benefits of using a real-time kernel

- Abstracting away timing information

- The kernel is responsible for execution timing and provides a time-related API to the application. This allows the application code to be simpler and the overall code size to be smaller.

- Maintainability/Extensibility

- Abstracting away timing details results in fewer interdependencies between modules and allows software to evolve in a predictable way.

- Application performance is less susceptible to changes in the underlying hardware.

- Modularity

- Tasks are independent modules, each of which has a well-defined purpose.

- Team development

- Tasks have well-defined interfaces, allowing easier development by teams

- Easier testing

- Because tasks are independent modules with clean interfaces, they can be tested in isolation.

- Idle time utilization

- The idle task is created automatically when the kernel is started. It executes whenever there are no application tasks to run.

- It can be used to measure spare processing capacity, perform background checks, or simply place the processor into a low-power mode.

- Flexible interrupt handling

- Interrupt handlers can be kept very short by deferring most of the required processing to handler tasks.

Standard FreeRTOS features

- Pre-emptive or co-operative operation

- Very flexible task priority assignment

- Queues

- Binary, counting and recursive semaphores

- Mutexes

- Tick/Idle hook functions

- Stack overflow checking

- Trace hook macros

- Interrupt nesting

1. Task management

1.1 Task scope

- Main topics to be covered:

- How FreeRTOS allocates processing time to each task within an application

- How FreeRTOS chooses which task should execute at any given time

- How the relative priority of each task affects system behavior

- The states that a task can exist in.

- More specific topics:

- How to implement tasks

- How to create one or more instances of a task

- How to use the task parameter

- How to change the priority of a task that has already been created.

- How to delete a task.

- How to implement periodic processing.

- When the idle task will execute and how it can be used.

1.2 Task functions

- Tasks are implemented as C functions.

- Special: the prototype must return void and take a void pointer parameter, as follows:

void ATaskFunction( void *pvParameters );

- Each task is a small program in its own right.

- Has an entry point

- Normally runs forever within an infinite loop

- Does not exit

- Special features of a FreeRTOS task function

- Must not contain a return statement

- Must not be allowed to execute past the end of the function

- If a task is no longer required, it should be explicitly deleted.

- A single task function can be used to create any number of tasks

- Each created task is a separate execution instance with its own stack, and its own copy of any automatic variables defined within the task itself.

ATaskFunction

void ATaskFunction( void *pvParameters )
{
    /* Variables can be declared just as per a normal function. Each instance
    of a task created using this function will have its own copy of the
    iVariableExample variable. This would not be true if the variable was
    declared static - in which case only one copy of the variable would exist
    and this copy would be shared by each created instance of the task. */
    int iVariableExample = 0;

    /* A task will normally be implemented as an infinite loop. */
    for( ;; )
    {
        /* The code to implement the task functionality will go here. */
    }

    /* Should the task implementation ever break out of the above loop then
    the task must be deleted before reaching the end of this function. The
    NULL parameter passed to the vTaskDelete() function indicates that the
    task to be deleted is the calling (this) task. */
    vTaskDelete( NULL );
}

1.3 Top level task states

- A task can exist in one of two states: Running and Not Running

- Running state: the processor is executing its code.

- Not Running state: the task is dormant, its status having been saved so that it is ready to resume execution the next time the scheduler decides it should enter the Running state.

- The scheduler is the only entity that can switch a task into and out of the Running state.

1.4 Creating Tasks

xTaskCreate() API function - the most fundamental component in a multitasking system

- Probably the most complex of all API functions

portBASE_TYPE xTaskCreate ( pdTASK_CODE pvTaskCode, const signed portCHAR *const pcName, unsigned portSHORT usStackDepth, void *pvParameters, unsigned portBASE_TYPE uxPriority, xTaskHandle *pxCreatedTask );

All parameters

pvTaskCode - a pointer to the function that implements the task (simply the function name).

pcName - a descriptive name for the task. It is not used by FreeRTOS, but aids debugging.

- configMAX_TASK_NAME_LEN: constant that defines the maximum length a task name can take, including the NULL terminator.

usStackDepth - each task has its own unique stack that is allocated by the kernel to the task when the task is created.

- The value specifies the number of words (not bytes) the task stack can hold.

- The size of the stack used by the idle task is defined by configMINIMAL_STACK_SIZE.

pvParameters - the value assigned to pvParameters will be the value passed into the task.

uxPriority - defines the priority at which the task will execute.

- Priorities can be assigned from 0, which is the lowest priority, to (configMAX_PRIORITIES - 1), which is the highest priority.

- Passing a value above (configMAX_PRIORITIES - 1) will result in the priority being capped at the maximum legitimate value.

pxCreatedTask - used to pass out a handle to the created task, which can then be used to refer to the created task in API calls.

- E.g., to change the task priority or delete the task

- Can be set to NULL if the task handle is not needed

- Two possible return values

pdTRUE: the task has been created successfully.

errCOULD_NOT_ALLOCATE_REQUIRED_MEMORY: the task has not been created, as there is insufficient heap memory available for FreeRTOS to allocate enough RAM to hold the task data structures and stack.
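As a rough check on the two numeric parameters above, the host-side C sketch below computes how many bytes a usStackDepth value reserves and models the documented priority capping. It is not FreeRTOS code: MAX_PRIORITIES is a hypothetical stand-in for configMAX_PRIORITIES, and the word size is passed in because it depends on the port (4 bytes on a typical 32-bit MCU).

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for configMAX_PRIORITIES from FreeRTOSConfig.h. */
#define MAX_PRIORITIES 5u

/* usStackDepth counts stack words, not bytes. word_size would be the width
   of the port's stack type (4 bytes on a typical 32-bit MCU). */
size_t stack_bytes(unsigned depth_words, size_t word_size)
{
    assert(word_size > 0);
    return (size_t)depth_words * word_size;
}

/* Models the documented rule: a requested priority above
   (MAX_PRIORITIES - 1) is capped at the highest legitimate value. */
unsigned effective_priority(unsigned requested)
{
    return requested > MAX_PRIORITIES - 1u ? MAX_PRIORITIES - 1u : requested;
}
```

For example, the stack depth of 240 used in the following examples would reserve 960 bytes on a port with a 32-bit stack, and with five priorities a requested priority of 9 would be treated as 4.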

Example 1 - Creating tasks

- Demonstrates the steps of creating two tasks and then starting the tasks executing.

- Tasks simply print out a string periodically, using a crude null loop to create the periodic delay.

- Both tasks are created at the same priority and are identical except for the string they print out.


- Both tasks are rapidly entering and exiting the Running state.

- Only one task can exist in the Running state at any one time.

Example 2 - Using the task parameter

- The two tasks in Example 1 are almost identical, the only difference between them being the text string they print out.

- Remove such duplication by creating two instances of a single task implementation, vTaskFunction, using the task parameter

- First define a char string pcTextForTask1:

static const char *pcTextForTask1 = "Task 1 is running.\n";

- Then, in the main(void) function, change the task creation call to:

xTaskCreate( vTaskFunction, "Task 1", 240, (void*)pcTextForTask1, 1, NULL );

- Create a task function that recovers the string from its parameter:

void vTaskFunction( void *pvParameters )
{
    char *pcTaskName = ( char * ) pvParameters; /* the string is passed in via the task parameter */
    for( ;; ) { vPrintString( pcTaskName ); /* then delay as in Example 1 */ }
}
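Because pvParameters is a void pointer, it can carry more than a string. The host-side sketch below assumes a hypothetical TaskParams struct and models a single pass of a shared task function's loop, writing into a buffer instead of calling vPrintString() so the result can be checked. The parameter must point at storage that remains valid for as long as the task uses it, such as a static or global variable.

```c
#include <assert.h>
#include <stdio.h>

/* Hypothetical parameter block: one struct can carry several values
   through the single void * task parameter. */
typedef struct {
    const char *text;      /* string the task prints */
    unsigned    period_ms; /* how often it would print */
} TaskParams;

/* Models one pass through the loop of a shared task function. Each
   instance receives a different TaskParams via pvParameters. */
int task_iteration(void *pvParameters, char *out, size_t out_len)
{
    const TaskParams *params = (const TaskParams *)pvParameters;
    assert(params != NULL);
    return snprintf(out, out_len, "%s (every %u ms)", params->text, params->period_ms);
}

/* Static storage, so the pointers stay valid for the tasks' lifetime. */
static TaskParams task1_params = { "Task 1 is running.", 250u };
static TaskParams task2_params = { "Task 2 is running.", 500u };
```

On the target, each instance would be created with the same function but a different pointer, e.g. (void*)&task1_params and (void*)&task2_params.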

1.5 Task priorities

The uxPriority parameter of xTaskCreate() assigns an initial priority to the task being created.

- It can be changed after the scheduler has been started by using the vTaskPrioritySet() API function

configMAX_PRIORITIES in "FreeRTOSConfig.h" - the maximum number of priorities

- The higher this value, the more RAM is consumed

- Range: 0 (lowest) to (configMAX_PRIORITIES - 1) (highest)

- Any number of tasks can share the same priority

- To select the next task to run, the scheduler itself must execute at the end of each time slice.

- A periodic interrupt, called the tick interrupt, is used for this purpose.

- The length of the time slice is effectively set by the tick interrupt frequency, configTICK_RATE_HZ in "FreeRTOSConfig.h"

- If configTICK_RATE_HZ is 100 (Hz), the time slice will be 10 ms.

- API calls always specify time in tick interrupts (ticks)

- portTICK_RATE_MS can be used to convert time delays from milliseconds into a number of tick interrupts.

- The arrows in the above figure show the sequence of execution when the kernel itself is running: from a task into the tick interrupt, then from the interrupt back to the next task.
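The tick arithmetic above can be checked on a host with the small C sketch below. It mirrors the classic definition of portTICK_RATE_MS as 1000 / configTICK_RATE_HZ (milliseconds per tick) and contrasts it with the multiply-first form used by the pdMS_TO_TICKS() macro in later FreeRTOS releases. The function names are hypothetical, and the 64-bit intermediate is this sketch's own choice to avoid overflow.

```c
#include <assert.h>
#include <stdint.h>

/* Classic portTICK_RATE_MS: milliseconds per tick. */
uint32_t tick_period_ms(uint32_t tick_rate_hz)
{
    assert(tick_rate_hz > 0);
    return 1000u / tick_rate_hz;
}

/* The idiom used in this document: delay_ms / portTICK_RATE_MS. */
uint32_t ms_to_ticks_classic(uint32_t delay_ms, uint32_t tick_rate_hz)
{
    uint32_t ms_per_tick = tick_period_ms(tick_rate_hz);
    assert(ms_per_tick > 0); /* fails if the tick rate exceeds 1000 Hz */
    return delay_ms / ms_per_tick;
}

/* Multiply-first form, as in pdMS_TO_TICKS(). */
uint32_t ms_to_ticks_scaled(uint32_t delay_ms, uint32_t tick_rate_hz)
{
    return (uint32_t)(((uint64_t)delay_ms * tick_rate_hz) / 1000u);
}
```

At 100 Hz, 250 / portTICK_RATE_MS gives 25 ticks. Integer division truncates, so a 15 ms delay becomes a single 10 ms tick, and with a tick rate above 1000 Hz the classic macro evaluates to 0, which makes the division invalid.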

Example 3 - Experimenting with priorities

- The scheduler always ensures that the highest priority task that is able to run is the task selected to enter the Running state.

- In Examples 1 and 2, the two tasks were created at the same priority, so both entered and exited the Running state in turn. In this example, the second task is created at priority 2.

- In the main() function, change:

xTaskCreate( vTaskFunction, "Task 2", 240, (void*)pcTextForTask2, 1, NULL );

To

xTaskCreate( vTaskFunction, "Task 2", 240, (void*)pcTextForTask2, 2, NULL );

- The scheduler always selects the highest priority task that is able to run.

- Task 2 has a higher priority than Task 1; so Task 2 is the only task to ever enter the Running state.

- Task 1 is ‘starved’ of processing time by Task 2.

‘Continuous processing’ task

- So far, the created tasks have always had work to perform and have never had to wait for anything.

- They are always able to enter the Running state.

- This type of task has limited usefulness, as such tasks can only be created at the very lowest priority.

- If they run at any other priority, they will prevent tasks of lower priority from ever running at all.

- Solution: Event-driven tasks

1.6 Expanding the ‘Not Running’ state

- An event-driven task

- has work to perform only after the occurrence of the event that triggers it

- is not able to enter the Running state before that event has occurred.

- The scheduler selects the highest priority task that is able to run.

- If high priority tasks are not able to run, the scheduler cannot select them, and

- must select a lower priority task that is able to run instead.

- Using event-driven tasks means that

- tasks can be created at different priorities without the highest priority tasks starving all the lower priority tasks.

Full task state machine

Blocked state

- Tasks enter this state to wait for two types of events

- Temporal (time-related) events: the event being either a delay expiring, or an absolute time being reached.

- E.g., a task enters the Blocked state to wait for 10 ms to pass.

- Synchronization events: the events originate from another task or interrupt

- E.g., a task enters the Blocked state to wait for data to arrive on a queue.

- A task can block on a synchronization event with a timeout, effectively blocking on both types of event simultaneously.

- A task waits for a maximum of 10ms for data to arrive on a queue. It leaves the Blocked state if either data arrives within 10ms or 10ms pass with no data arriving.

Suspended state

- Tasks in this state are not available to the scheduler.

- The only way into this state is through a call to the vTaskSuspend() API function

- The only way out of this state is through a call to the vTaskResume() or vTaskResumeFromISR() API functions

Ready state

- Tasks that are in the ‘Not Running’ state but are not Blocked or Suspended are said to be in the Ready state.

- They are able to run, and therefore ‘ready’ to run, but are not currently in the Running state.
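The selection rule described so far can be modelled in a few lines of host-side C. The sketch below is a simplified model, not the FreeRTOS scheduler: TaskModel, select_next() and the state names are hypothetical, the currently Running task is treated as TASK_READY (it is able to run), and time slicing among equal priorities is approximated by starting each search just after the previously selected task.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model states; the Running task is also marked TASK_READY. */
typedef enum { TASK_READY, TASK_BLOCKED, TASK_SUSPENDED } TaskState;

typedef struct {
    const char *name;
    unsigned    priority;
    TaskState   state;
} TaskModel;

/* Returns the index of the task that would enter the Running state: the
   highest priority task able to run. The search starts just after `last`
   (the previously selected task), so tasks of equal priority take turns.
   Returns -1 if no task is able to run. */
int select_next(const TaskModel *tasks, size_t n, size_t last)
{
    int best = -1;
    assert(tasks != NULL && n > 0);
    for (size_t k = 1; k <= n; k++) {
        size_t i = (last + k) % n;
        if (tasks[i].state != TASK_READY)
            continue; /* Blocked and Suspended tasks cannot be selected */
        if (best < 0 || tasks[i].priority > tasks[(size_t)best].priority)
            best = (int)i;
    }
    return best;
}
```

With two priority 1 tasks and the idle task all able to run, the model alternates between the two application tasks (as in Examples 1 and 2); raising one of them to priority 2 makes it the only task selected (Example 3); and when both application tasks are Blocked, the idle task is selected.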

Example 4 - Creating a delay

- All tasks in the previous examples have been periodic

- They have delayed for a period and printed out their string before delaying once more, and so on.

- The delay was generated using a null loop

- the task effectively polled an incrementing loop counter until it reached a fixed value.

- Disadvantages to any form of polling

- While executing the null loop, the task remains in the Ready state, ‘starving’ the other task of any processing time.

- During polling, the task does not really have any work to do, but it still uses maximum processing time and so wastes processor cycles.

- This example corrects this behavior by

- replacing the polling null loop with a call to the vTaskDelay() API function.

- setting INCLUDE_vTaskDelay to 1 in "FreeRTOSConfig.h"

vTaskDelay() API function

- Place the calling task into the Blocked state for a fixed number of tick interrupts.

- The Blocked state task does not use any processing time, so processing time is consumed only when there is work to be done.

void vTaskDelay(portTickType xTicksToDelay);

xTicksToDelay: the number of ticks that the calling task should remain in the Blocked state before being transitioned back into the Ready state.

- E.g., if a task called vTaskDelay(100) while the tick count was 10,000, it would enter the Blocked state immediately and remain there until the tick count reached 10,100.
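The wake-up arithmetic in this example can be sketched in host-side C. The sketch assumes a 32-bit tick count and uses unsigned arithmetic, which wraps modulo 2^32, so the wake tick is simply the current count plus the delay. The wrap-safe comparison is one common technique and is only valid while the two values are less than 2^31 ticks apart. FreeRTOS itself handles tick overflow differently (it keeps a separate list for tasks whose wake time has wrapped), so wake_tick() and delay_expired() are hypothetical illustrations only.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Wake tick for a relative delay such as vTaskDelay(ticks_to_delay).
   uint32_t arithmetic wraps modulo 2^32, so no special case is needed. */
uint32_t wake_tick(uint32_t now, uint32_t ticks_to_delay)
{
    assert(ticks_to_delay < 0x80000000u); /* keeps delay_expired() valid */
    return now + ticks_to_delay;
}

/* True once `now` has reached `wake`; valid only while the two values
   are less than 2^31 ticks apart. */
bool delay_expired(uint32_t now, uint32_t wake)
{
    return (uint32_t)(now - wake) < 0x80000000u;
}
```

A call made at tick 10,000 with a delay of 100 expires at 10,100, and a delay of 0x20 ticks starting at 0xFFFFFFF0 expires at tick 0x10 after the count wraps.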

- In void vTaskFunction(void *pvParameters), change the null loop:

for( ul = 0; ul < mainDELAY_LOOP_COUNT; ul++ ) { }

- To:

vTaskDelay(250 / portTICK_RATE_MS); /* a period of 250ms is being specified. */


- Although two tasks are being created at different priorities, both will now run.

- Each time the tasks leave the Blocked state they execute for a fraction of a tick period before re-entering the Blocked state.

- Most of the time no application task is able to run, so no application task can be selected to enter the Running state.

- The idle task will run to ensure there is always at least one task that is able to run.

- Bold lines indicate the state transitions performed by the tasks in Example 4

vTaskDelayUntil() API Function

- The parameters to vTaskDelayUntil() specify the exact tick count value at which the calling task should be moved from the Blocked state into the Ready state.

- Used when a fixed execution period is required.

- The time at which the calling task is unblocked is absolute, rather than relative to when the function was called (as is the case with vTaskDelay()).

void vTaskDelayUntil( portTickType *pxPreviousWakeTime, portTickType xTimeIncrement );

pxPreviousWakeTime - assume that vTaskDelayUntil() is being used to implement a task that executes periodically and with a fixed frequency.

- Holds the time at which the task left the Blocked state.

- Used as a reference point to compute the time at which the task next leaves the Blocked state.

- The variable pointed to by pxPreviousWakeTime is updated automatically; it should not be modified by application code, other than when the variable is first initialized.

xTimeIncrement - again assume that vTaskDelayUntil() is being used to implement a task that executes periodically and with a fixed frequency, set by xTimeIncrement.

- Specified in ‘ticks’. The constant portTICK_RATE_MS can be used to convert milliseconds to ticks.
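To see why the absolute reference point matters, the host-side sketch below models both functions for a task that does exec_ticks of work after each wake-up before delaying again. It models only the documented behavior (the next wake time is *pxPreviousWakeTime plus xTimeIncrement, and the variable is updated in place); the function names are hypothetical, the tick count is assumed not to wrap, and the work is assumed to take less than one period.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Documented vTaskDelayUntil() contract: the next wake time is the previous
   wake time plus the increment, and *previous_wake is updated in place. */
uint32_t delay_until_next(uint32_t *previous_wake, uint32_t increment)
{
    assert(previous_wake != NULL);
    *previous_wake += increment;
    return *previous_wake;
}

/* vTaskDelay() behavior: the delay starts when the call is made, i.e.
   after the task has spent exec_ticks doing its work since waking. */
uint32_t delay_next(uint32_t woke_at, uint32_t exec_ticks, uint32_t period)
{
    return woke_at + exec_ticks + period;
}

/* Wake time of the k-th iteration of a task started at tick 0. */
uint32_t kth_wake_with_delay(unsigned k, uint32_t exec_ticks, uint32_t period)
{
    uint32_t t = 0;
    for (unsigned i = 0; i < k; i++)
        t = delay_next(t, exec_ticks, period);
    return t;
}

/* Execution time drops out: only the reference point and period matter. */
uint32_t kth_wake_with_delay_until(unsigned k, uint32_t period)
{
    uint32_t last_wake = 0; /* plays the role of xLastWakeTime = xTaskGetTickCount() */
    uint32_t t = 0;
    for (unsigned i = 0; i < k; i++)
        t = delay_until_next(&last_wake, period);
    return t;
}
```

With a 25-tick period and 2 ticks of work per iteration, the vTaskDelay() model wakes at ticks 27, 54, 81 and 108, drifting by the execution time every cycle, while the vTaskDelayUntil() model wakes at 25, 50, 75 and 100.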

Example 5 - Converting the example tasks to use vTaskDelayUntil()

- The two tasks created in Example 4 are periodic tasks.

- However, vTaskDelay() does not ensure that the frequency at which they run is fixed, as the time at which the tasks leave the Blocked state is relative to when they call vTaskDelay().

- In void vTaskFunction(void *pvParameters), change:

vTaskDelay(250 / portTICK_RATE_MS); /* a period of 250ms is being specified. */

- to:

portTickType xLastWakeTime;

/* Before the loop: initialize xLastWakeTime with the current tick count. */
xLastWakeTime = xTaskGetTickCount();

/* Inside the loop: xLastWakeTime is updated automatically within vTaskDelayUntil(). */
vTaskDelayUntil( &xLastWakeTime, ( 250 / portTICK_RATE_MS ) );

Example 6 - Blocking and non-blocking tasks

- Two tasks are created at priority 1.

- They make no API function calls, so they are always in either the Ready or the Running state.

- Continuous processing tasks: they always have work to do.

- A third task is created at priority 2.

- It periodically prints out a string, using vTaskDelayUntil() to place itself into the Blocked state between each print iteration.

void vContinuousProcessingTask( void *pvParameters )
{
    char *pcTaskName = ( char * ) pvParameters;
    volatile unsigned long ul;

    for( ;; )
    {
        vPrintString( pcTaskName );
        for( ul = 0; ul < 0xfff; ul++ ) { } /* crude delay; the task never blocks */
    }
}

void vPeriodicTask( void *pvParameters )
{
    portTickType xLastWakeTime = xTaskGetTickCount();

    for( ;; )
    {
        vPrintString( "Periodic task is running\n" );
        vTaskDelayUntil( &xLastWakeTime, ( 10 / portTICK_RATE_MS ) );
    }
}

1.7 Idle task

- An idle task is automatically created by the scheduler when vTaskStartScheduler() is called.

- It does very little more than sit in a loop.

- It has the lowest possible priority (zero), so it never prevents a higher priority application task from entering the Running state.

- It is transitioned out of the Running state as soon as a higher priority task enters the Ready state.

Idle Task Hook Functions

- Application-specific functionality can be added directly into the idle task by using an idle hook

- A function called automatically by the idle task once per iteration of the idle task loop

- Common uses for the Idle task hook

- Executing low priority, background, or continuous processing

- Measuring the amount of spare processing capacity

- Placing the processor into a low power mode

- Rules idle task hook functions must adhere to

- Must never attempt to block or suspend.

- If the application uses vTaskDelete(), the idle task hook must always return to its caller within a reasonable time period.

- The idle task is responsible for cleaning up kernel resources after a task has been deleted.

- If the idle task remains permanently in the idle hook function, this clean-up cannot occur.

- Idle task hook functions must have the following name and prototype:

void vApplicationIdleHook(void);

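Example 7 - Defining an idle task hook function

- Set configUSE_IDLE_HOOK to 1 in "FreeRTOSConfig.h"

- Add the following function:

/* Incremented by the idle hook and read by the application tasks, so declared volatile. */
volatile unsigned long ulIdleCycleCount = 0UL;

/* Idle hook functions must be called vApplicationIdleHook(), take no parameters and return void. */
void vApplicationIdleHook( void )
{
    /* This hook function does nothing but increment a counter. */
    ulIdleCycleCount++;
}

- In vTaskFunction(), change:

vPrintString( pcTaskName );

- To:

vPrintStringAndNumber( pcTaskName, ulIdleCycleCount );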
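One common way to turn ulIdleCycleCount into a figure for spare processing capacity is to compare the count over a fixed time window with a calibration count recorded over the same window while no application task was running. The sketch below assumes that approach; the calibration procedure and cpu_load_percent() are hypothetical, and the estimate is only approximate because it assumes every idle-loop iteration takes about the same time.

```c
#include <assert.h>
#include <stdint.h>

/* Estimated CPU load in percent. idle_max is the idle-hook count over a
   window with no application tasks running (calibration); idle_now is
   the count over an equal window during normal operation. */
unsigned cpu_load_percent(uint32_t idle_now, uint32_t idle_max)
{
    assert(idle_max > 0);
    if (idle_now >= idle_max)
        return 0u; /* fully idle, or a noisy sample above calibration */
    return (unsigned)(100u - (uint64_t)idle_now * 100u / idle_max);
}
```

If the calibration window produced 1000 idle iterations and the same window under load produces 750, the estimated load is 25 percent.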

Idle task and Idle Task Hook

- The idle task has the lowest priority; functionality can be added to it via an idle hook for:

- Executing continuous processing

- Measuring spare processing capacity

- Placing the processor into a low power mode

- Two rules

- Never block or suspend

- Must return to its caller within a reasonable time period, as the idle task needs to clean up kernel resources after a task has been deleted.

1.8 Scheduling

void vTaskPrioritySet( xTaskHandle pxTask, unsigned portBASE_TYPE uxNewPriority );

- Changes the priority of any task after the scheduler has been started.

- Available only if INCLUDE_vTaskPrioritySet is set to 1.

pxTask: handle of the task. A task can change its own priority by passing NULL in place of a valid task handle.

uxNewPriority: the priority to be set.

unsigned portBASE_TYPE uxTaskPriorityGet( xTaskHandle pxTask );

- Queries the priority of a task

- Available only if INCLUDE_uxTaskPriorityGet is set to 1.

pxTask: handle of the task. A task can query its own priority by passing NULL in place of a valid task handle.

- Returned value: the priority currently assigned to the task being queried

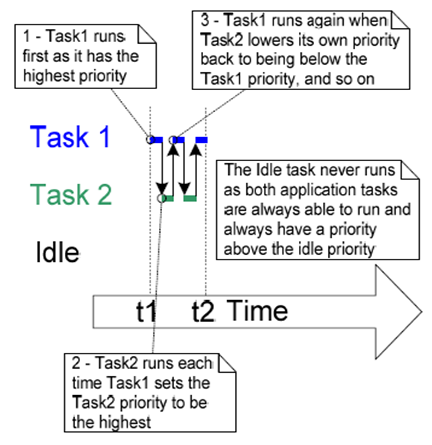

Example 8 - Changing task priorities

- Demonstrates that the scheduler always selects the highest priority Ready state task to run,

- by using the vTaskPrioritySet() API function to change the priority of two tasks relative to each other.

- Two tasks are created at two different priorities.

- Neither task makes any API function calls that cause it to enter the Blocked state,

- so both are always in either the Ready or the Running state.

- Therefore, the task with the highest priority will always be the task selected by the scheduler to be in the Running state.

- Expected Behavior of Example 8:

- Task 1 is created with the highest priority to be guaranteed to run first. Task 1 prints out a couple of strings before raising the priority of Task 2 to above its own priority.

- Task 2 starts to run, as it now has the highest relative priority.

- Task 2 prints out a message before setting its own priority back to below that of Task 1.

- Task 1 is once again the highest priority task, so it starts to run, forcing Task 2 back into the Ready state.

- Declare a global variable to hold the handle of Task 2.

xTaskHandle xTask2Handle;

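- In the main() function, create the two tasks:

xTaskCreate( vTask1, "Task 1", 240, NULL, 2, NULL );
xTaskCreate( vTask2, "Task 2", 240, NULL, 1, &xTask2Handle ); /* Task 2's handle is stored in xTask2Handle */

- In vTask1, add the following initialization before the infinite loop:

unsigned portBASE_TYPE uxPriority;
uxPriority = uxTaskPriorityGet( NULL ); /* NULL queries the calling task's own priority */

- And add to the infinite loop (raising Task 2 above Task 1):

vTaskPrioritySet( xTask2Handle, ( uxPriority + 1 ) );

- In vTask2, add the same initialization before the infinite loop:

unsigned portBASE_TYPE uxPriority;
uxPriority = uxTaskPriorityGet( NULL );

- And add to the infinite loop (dropping Task 2 back below Task 1):

vTaskPrioritySet( NULL, ( uxPriority - 2 ) );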

- Task execution sequence:

1.9 Deleting tasks

- Deleted tasks no longer exist and cannot enter the Running state again.

- The idle task is responsible for automatically freeing the memory allocated by the kernel to tasks that have been deleted.

- Remember: if an application uses vTaskDelete(), it must not completely starve the idle task of all processing time.

- Note: any memory or other resource that the application task allocates itself must be freed explicitly by the application code.

vTaskDelete() API function

- Function prototype:

void vTaskDelete( xTaskHandle pxTaskToDelete );

pxTaskToDelete: handle of the task that is to be deleted. A task can delete itself by passing NULL in place of a valid task handle.

- Available only when INCLUDE_vTaskDelete is set to 1

Example 9 - Deleting tasks

- Task 1 is created by main() with priority 1. When it runs, it creates Task 2 at priority 2. Task 2, now the highest priority task, starts to execute immediately.

- Task 2 does nothing but delete itself, by passing NULL or its own task handle to vTaskDelete().

- When Task 2 has been deleted, Task 1 is again the highest priority task, so it continues executing, at which point it calls vTaskDelay() to block for a short period.

- The idle task executes while Task 1 is in the Blocked state and frees the memory that was allocated to the now deleted Task 2.

- When Task 1 leaves the Blocked state, it again becomes the highest priority Ready state task and preempts the idle task. The sequence then repeats from the first step.

Memory Management

"Heap_1.c" - does not permit memory to be freed once it has been allocated.

- Subdivides a single array into smaller blocks. The total size of the array (the heap) is set by configTOTAL_HEAP_SIZE.

- xPortGetFreeHeapSize() returns the total amount of heap space that remains unallocated.

"Heap_2.c" - allows previously allocated blocks to be freed.

- Does not combine adjacent free blocks into a single large block.
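The first scheme above can be modelled on a host as a bump allocator over a single static array. The sketch below is an illustration rather than the FreeRTOS source: TOTAL_HEAP_SIZE and BYTE_ALIGNMENT are hypothetical stand-ins for configTOTAL_HEAP_SIZE and the port's alignment requirement, and model_free_heap_size() plays the role of xPortGetFreeHeapSize().

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-ins for configTOTAL_HEAP_SIZE and the port's
   alignment requirement. */
#define TOTAL_HEAP_SIZE 1024u
#define BYTE_ALIGNMENT  8u

/* The single array that is subdivided into blocks. This sketch assumes the
   array itself is suitably aligned; a real allocator must ensure that. */
static uint8_t heap[TOTAL_HEAP_SIZE];
static size_t next_free = 0; /* offset of the first byte never handed out */

/* Hands out the next block of the array. There is deliberately no
   matching free function. Returns NULL when the heap is exhausted. */
void *model_malloc(size_t wanted)
{
    size_t rounded;
    void *block;

    assert(wanted > 0);
    rounded = (wanted + BYTE_ALIGNMENT - 1u) & ~(size_t)(BYTE_ALIGNMENT - 1u);
    if (rounded > TOTAL_HEAP_SIZE - next_free)
        return NULL;
    block = &heap[next_free];
    next_free += rounded;
    return block;
}

/* Plays the role of xPortGetFreeHeapSize(): bytes that remain unallocated. */
size_t model_free_heap_size(void)
{
    return TOTAL_HEAP_SIZE - next_free;
}
```

Requesting 10 bytes consumes 16 because block sizes are rounded up to the alignment, and since there is no free function, the remaining space only ever shrinks.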

Summary - 1. Prioritized pre-emptive scheduling

- Examples illustrate how and when FreeRTOS selects which task should be in the Running state.

- Each task is assigned a priority.

- Each task can exist in one of several states.

- Only one task can exist in the Running state at any one time.

- The scheduler always selects the highest priority Ready state task to enter the Running state.

- Fixed priority

- Each task is assigned a priority that is not altered by the kernel itself (only tasks can change priorities)

- Pre-emptive

- A task entering the Ready state or having its priority altered will always pre-empt the Running state task, if the Running state task has a lower priority.

- Tasks can wait in the Blocked state for an event and are automatically moved back to the Ready state when the event occurs.

- Temporal events

- Occur at a particular time, e.g. a block time expires.

- Generally used to implement periodic or timeout behavior.

- Synchronization events

- Occur when a task or ISR sends information to a queue or to one of the many types of semaphore.

- Generally used to signal asynchronous activity, such as data arriving at a peripheral.

Summary - 2. Selecting Task Priorities

- Tasks that implement hard real-time functions are assigned priorities above those that implement soft real-time functions.

- Execution times and processor utilization must also be taken into account to ensure the entire application will never miss a hard real-time deadline.

- Rate monotonic scheduling (RMS)

- A common priority assignment technique which assigns each task a unique priority according to the task's periodic execution rate.

- Highest priority is assigned to the task that has the highest frequency of periodic execution.

- Lowest priority is assigned to the task that has the lowest frequency of periodic execution.

- Can maximize the schedulability of the entire application.

- But runtime variations, and the fact that not all tasks are in any way periodic, make absolute calculations a complex process.
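The rate monotonic rule can be illustrated with a short host-side C sketch. It assigns unique priorities by period and applies the Liu and Layland utilization bound n(2^(1/n) - 1), a sufficient (not necessary) schedulability test for independent periodic tasks whose deadlines equal their periods. All names are hypothetical, and 2^(1/n) is computed by bisection only to avoid depending on the maths library.

```c
#include <assert.h>
#include <stdbool.h>

/* Rate monotonic assignment: the shorter the period, the higher the
   priority. Ties are broken by array index so every priority is unique.
   Priorities start at `base` (e.g. 1, leaving 0 for the idle task). */
void rms_assign(const unsigned *period, unsigned *priority, unsigned n, unsigned base)
{
    for (unsigned i = 0; i < n; i++) {
        unsigned longer = 0; /* tasks that must rank below task i */
        for (unsigned j = 0; j < n; j++)
            if (period[j] > period[i] || (period[j] == period[i] && j > i))
                longer++;
        priority[i] = base + longer;
    }
}

/* 2^(1/n) by bisection on x^n = 2, to avoid a libm dependency. */
static double root2(unsigned n)
{
    double lo = 1.0, hi = 2.0;
    for (int it = 0; it < 100; it++) {
        double mid = (lo + hi) / 2.0, p = 1.0;
        for (unsigned k = 0; k < n; k++)
            p *= mid;
        if (p < 2.0) lo = mid; else hi = mid;
    }
    return lo;
}

/* Liu and Layland bound n(2^(1/n) - 1). */
double rms_bound(unsigned n)
{
    return n * (root2(n) - 1.0);
}

/* Sufficient test: total utilization sum(C/T) must not exceed the bound. */
bool rms_ll_schedulable(const unsigned *exec, const unsigned *period, unsigned n)
{
    double u = 0.0;
    assert(n > 0);
    for (unsigned i = 0; i < n; i++)
        u += (double)exec[i] / period[i];
    return u <= rms_bound(n);
}
```

For periods of 100, 10 and 50 ticks with base priority 1, the tasks receive priorities 1, 3 and 2. Execution times of 10, 2 and 10 ticks give a utilization of 0.5, below the three-task bound of about 0.78, so the test passes; a task set that fails it may still be schedulable and needs a more exact analysis.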

Summary - 3. Co-operative scheduling

- In a pure co-operative scheduler, a context switch occurs only when:

- the Running state task enters the Blocked state

- or, the Running state task explicitly calls taskYIELD()

- Tasks will never be pre-empted, and tasks of equal priority will not automatically share processing time.

- This results in a less responsive system.

- In a hybrid scheme, ISRs are used to explicitly cause a context switch. This:

- allows synchronization events to cause pre-emption, but not temporal events.

- results in a pre-emptive system without time slicing.

- is desirable due to its efficiency gains and is a common scheduler configuration.

2. Queue management

2.1 Queue Management Scope

- FreeRTOS applications are structured as a set of independent tasks

- Each task is effectively a mini program in its own right.

- Tasks have to communicate with each other to collectively provide useful system functionality.

- The queue is the underlying primitive

- It is used by the communication and synchronization mechanisms in FreeRTOS.

- Scope

- How to create a queue

- How a queue manages the data it contains

- How to send data to a queue

- How to receive data from a queue

- What it means to block on a queue

- The effect of task priorities when writing to and reading from a queue

2.2 Queue Characteristics – data storage

- A queue can hold a finite number of fixed size data items.

- Normally used as FIFO buffers, where data is written to the end (tail) of the queue and removed from the front (head).

- Also possible to write to the front of a queue.

- Writing data to a queue causes a byte-for-byte copy of the data to be stored in the queue itself.

- Reading data from a queue causes the copy of the data to be removed from the queue.

- Queues are objects in their own right

- Not owned by or assigned to any particular task

- Any number of tasks can write to the same queue and any number of tasks can read from the same queue.

- Very common to have multiple writers, but very rare to have multiple readers.
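The copy semantics described above can be modelled with a small fixed-size ring buffer. The host-side sketch below is not the FreeRTOS queue implementation and never blocks: Q_LENGTH and Q_ITEM_SIZE are hypothetical stand-ins for the uxQueueLength and uxItemSize values passed to xQueueCreate(), and a false return marks the point at which a real call would block or, with a zero block time, fail. Items are stored with memcpy(), so changing the sender's variable after a send does not affect the queued copy.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define Q_LENGTH    4u          /* hypothetical uxQueueLength */
#define Q_ITEM_SIZE sizeof(int) /* hypothetical uxItemSize */

/* Fixed-capacity ring buffer that stores byte-for-byte copies of items. */
typedef struct {
    unsigned char storage[Q_LENGTH * Q_ITEM_SIZE];
    size_t head;  /* slot of the item at the front of the queue */
    size_t count; /* number of items currently held */
} ModelQueue;

bool q_send_to_back(ModelQueue *q, const void *item)
{
    assert(q != NULL && item != NULL);
    if (q->count == Q_LENGTH)
        return false; /* full */
    size_t tail = (q->head + q->count) % Q_LENGTH;
    memcpy(&q->storage[tail * Q_ITEM_SIZE], item, Q_ITEM_SIZE); /* copy, not reference */
    q->count++;
    return true;
}

bool q_send_to_front(ModelQueue *q, const void *item)
{
    if (q->count == Q_LENGTH)
        return false; /* full */
    q->head = (q->head + Q_LENGTH - 1u) % Q_LENGTH; /* step the head back one slot */
    memcpy(&q->storage[q->head * Q_ITEM_SIZE], item, Q_ITEM_SIZE);
    q->count++;
    return true;
}

/* Copies the front item into buffer; removes it only if `remove` is true
   (receive), otherwise leaves it in place (peek). */
bool q_receive(ModelQueue *q, void *buffer, bool remove)
{
    if (q->count == 0)
        return false; /* empty */
    memcpy(buffer, &q->storage[q->head * Q_ITEM_SIZE], Q_ITEM_SIZE);
    if (remove) {
        q->head = (q->head + 1u) % Q_LENGTH;
        q->count--;
    }
    return true;
}
```

Sending 1 and 2 to the back and then 9 to the front leaves 9 at the head, so a peek returns 9 without removing it, and successive receives return 9, 1 and 2.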

Blocking on Queue Reads

- A task can optionally specify a ‘block’ time

- The maximum time that the task should be kept in the Blocked state to wait for data to be available from the queue.

- It is automatically moved to the Ready state when another task or interrupt places data into the queue.

- It will also be moved automatically from the Blocked state to the Ready state if the specified block time expires before data becomes available.

- Only one task will be unblocked when data becomes available.

- Queue can have multiple readers.

- So, it is possible for a single queue to have more than one task blocked on it waiting for data.

- The task that is unblocked will always be the highest priority task that is waiting for data.

- If the blocked tasks have equal priority, the task that has been waiting for data the longest will be unblocked.

Blocking on Queue Writes

- A task can optionally specify a ‘block’ time when writing to a queue.

- The maximum time that the task should be held in the Blocked state to wait for space to be available on the queue.

- Queue can have multiple writers.

- It is possible for a full queue to have more than one task blocked on it waiting to complete a send operation.

- Only one task will be unblocked when space on the queue becomes available.

- The task that is unblocked will always be the highest priority task that is waiting for space.

- If the blocked tasks have equal priority, the task that has been waiting for space the longest will be unblocked.
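The two selection rules above (highest priority first, then longest waiting) can be expressed as a small host-side function. The sketch below assumes a hypothetical Waiter record holding each blocked task's priority and the tick at which it started waiting, and ignores tick-count wrap-around; it describes which task is chosen, not how FreeRTOS stores its waiting tasks.

```c
#include <assert.h>
#include <stddef.h>

/* One task blocked on a queue, waiting either to read or to write. */
typedef struct {
    unsigned priority;
    unsigned blocked_since; /* tick at which the task started waiting */
} Waiter;

/* Index of the waiter unblocked when data (or space) becomes available:
   the highest priority task; among equal priorities, the one that has
   waited longest. Returns -1 if no task is waiting. */
int pick_waiter(const Waiter *w, size_t n)
{
    int best = -1;
    assert(n == 0 || w != NULL);
    for (size_t i = 0; i < n; i++) {
        if (best < 0
            || w[i].priority > w[best].priority
            || (w[i].priority == w[best].priority
                && w[i].blocked_since < w[best].blocked_since))
            best = (int)i;
    }
    return best;
}
```

With one priority 1 waiter and two priority 2 waiters that blocked at ticks 300 and 200, the priority 2 task that blocked at tick 200 is chosen.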

2.3 Using a Queue

- A queue must be explicitly created before it can be used.

- FreeRTOS allocates RAM from the heap when a queue is created.

- RAM holds both the queue data structure and the items that are contained in the queue.

xQueueCreate() API function

- Used to create a queue; returns an xQueueHandle to reference the queue it creates.

- Function prototype:

xQueueHandle xQueueCreate( unsigned portBASE_TYPE uxQueueLength, unsigned portBASE_TYPE uxItemSize );

uxQueueLength: the maximum number of items that the queue being created can hold at any one time.

uxItemSize: the size in bytes of each data item that can be stored in the queue.

- Return value:

- if NULL is returned, the queue cannot be created as there is insufficient heap memory available for FreeRTOS to allocate the queue data structures and storage.

- A non-NULL value returned indicates that the queue has been created successfully. It should be stored as the handle to the created queue.

xQueueSendToBack() and xQueueSendToFront() API Functions

xQueueSendToBack() - equivalent to xQueueSend()

- Used to send data to the back (tail) of a queue

xQueueSendToFront() - used to send data to the front (head) of a queue

- Never call these two API functions from an ISR!

- Function prototypes

portBASE_TYPE xQueueSendToBack ( xQueueHandle xQueue, const void *pvItemToQueue, portTickType xTicksToWait);

portBASE_TYPE xQueueSendToFront ( xQueueHandle xQueue, const void *pvItemToQueue, portTickType xTicksToWait);

xQueue: the handle of the queue to which the data is being sent (written). It will have been returned from the call to xQueueCreate() used to create the queue.

pvItemToQueue: a pointer to the data to be copied into the queue.

- The size of each item that the queue can hold is set when the queue is created, so that many bytes will be copied from pvItemToQueue into the queue storage area.

xTicksToWait: the maximum amount of time the task should remain in the Blocked state to wait for space to become available on the queue, should the queue already be full.

- If xTicksToWait is zero, both APIs will return immediately if the queue is already full.

- The block time is specified in tick periods, so the absolute time it represents depends on the tick frequency. The constant portTICK_RATE_MS can be used to convert a time specified in milliseconds into ticks.

- Returned value: two possible return values.

pdPASS: returned if data was successfully sent to the queue.

- If a block time was specified, the calling task may have been placed in the Blocked state to wait for another task or interrupt to make room in the queue before the function returned, but the data was successfully written to the queue before the block time expired.

errQUEUE_FULL: returned if data could not be written to the queue because the queue was already full.

- Likewise, if a block time was specified, it expired before space became available in the queue.

xQueueReceive() and xQueuePeek() API Functions

- xQueueReceive() receives (consumes) an item from a queue; the item received is removed from the queue.

- xQueuePeek() receives an item from the queue without removing it.

- Both receive the item from the head of the queue.

- Never call these two API functions from an ISR!

- Function prototypes:

portBASE_TYPE xQueueReceive( xQueueHandle xQueue, void *pvBuffer, portTickType xTicksToWait );

portBASE_TYPE xQueuePeek( xQueueHandle xQueue, void *pvBuffer, portTickType xTicksToWait );

- xQueue: the handle of the queue from which the data is being received (read). It will have been returned from the call to xQueueCreate() used to create the queue.

- pvBuffer: a pointer to the memory into which the received data will be copied.

- xTicksToWait: the maximum amount of time the task should remain in the Blocked state to wait for data to become available on the queue, should the queue already be empty.

- If xTicksToWait is zero, both functions return immediately if the queue is already empty. The block time is specified in tick periods, so the absolute time it represents depends on the tick frequency. The constant portTICK_RATE_MS can be used to convert a time specified in milliseconds into ticks.

- Returned value: two possible return values.

- pdPASS is returned if data was successfully read from the queue. If a block time was specified, data was successfully read from the queue before the block time expired.

- errQUEUE_EMPTY is returned if data could not be read from the queue because the queue was already empty. If a block time was specified, it expired before data was sent to the queue.

uxQueueMessagesWaiting() API Function

- Used to query the number of items that are currently in a queue.

- Prototype:

unsigned portBASE_TYPE uxQueueMessagesWaiting( xQueueHandle xQueue );

- Returned value: the number of items that the queue being queried is currently holding. If zero is returned, the queue is empty.
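The send, receive, peek and query semantics above can be tried on a host PC with a minimal model. This is a hypothetical sketch (the `ModelQueue` type and the `model_*` functions are invented for illustration), not the FreeRTOS implementation. It copies items by value into fixed storage sized from uxQueueLength and uxItemSize, but it never blocks, so a full or empty queue simply returns the failure code that a zero block time would produce.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical host-side model of a copy-by-value queue; it never blocks. */
#define MODEL_PASS 1      /* plays the role of pdPASS */
#define MODEL_FAIL 0      /* plays the role of errQUEUE_FULL / errQUEUE_EMPTY */
#define MODEL_STORAGE 64  /* bytes of storage the model can use */

typedef struct {
    unsigned char storage[MODEL_STORAGE];
    size_t length;   /* like uxQueueLength: maximum number of items */
    size_t itemSize; /* like uxItemSize: bytes copied per item */
    size_t head;     /* slot index of the item at the head (front) */
    size_t count;    /* number of items currently held */
} ModelQueue;

/* Like xQueueCreate(): fails (NULL in FreeRTOS) if the storage is too small. */
bool model_queue_init(ModelQueue *q, size_t length, size_t itemSize) {
    assert(q != NULL);
    if (length == 0 || itemSize == 0 || length > MODEL_STORAGE / itemSize) {
        return false;
    }
    q->length = length;
    q->itemSize = itemSize;
    q->head = 0;
    q->count = 0;
    return true;
}

/* Address of the slot 'offset' positions after the head, wrapping around. */
static unsigned char *model_slot(ModelQueue *q, size_t offset) {
    return &q->storage[((q->head + offset) % q->length) * q->itemSize];
}

/* Like xQueueSendToBack(q, item, 0): copy the item behind the last one. */
int model_send_to_back(ModelQueue *q, const void *item) {
    if (q->count == q->length) return MODEL_FAIL; /* full */
    memcpy(model_slot(q, q->count), item, q->itemSize);
    q->count++;
    return MODEL_PASS;
}

/* Like xQueueSendToFront(q, item, 0): copy the item in front of the head. */
int model_send_to_front(ModelQueue *q, const void *item) {
    if (q->count == q->length) return MODEL_FAIL; /* full */
    q->head = (q->head + q->length - 1) % q->length; /* move head back one slot */
    memcpy(model_slot(q, 0), item, q->itemSize);
    q->count++;
    return MODEL_PASS;
}

/* Like xQueuePeek(q, buffer, 0): copy the head item but leave it queued. */
int model_peek(ModelQueue *q, void *buffer) {
    if (q->count == 0) return MODEL_FAIL; /* empty */
    memcpy(buffer, model_slot(q, 0), q->itemSize);
    return MODEL_PASS;
}

/* Like xQueueReceive(q, buffer, 0): copy the head item and remove it. */
int model_receive(ModelQueue *q, void *buffer) {
    if (model_peek(q, buffer) != MODEL_PASS) return MODEL_FAIL;
    q->head = (q->head + 1) % q->length;
    q->count--;
    return MODEL_PASS;
}

/* Like uxQueueMessagesWaiting(q). */
size_t model_messages_waiting(const ModelQueue *q) {
    return q->count;
}
```

Sending 10 and 20 to the back and then 30 to the front makes 30 the first item received, followed by 10 and 20. A peek leaves the item count unchanged.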

Example 10 - Blocking when receiving from a queue

- To demonstrate

- a queue being created,

- Hold data items of type long

- data being sent to the queue from multiple tasks,

- Sending tasks do not specify a block time and have a lower priority than the receiving task.

- and data being received from the queue

- The receiving task specifies a block time of 100 ms.

- So the queue never contains more than one item:

- Once data is sent to the queue, the receiving task will unblock, pre-empt the sending tasks, and remove the data – leaving the queue empty once again.

- vSenderTask() does not specify a block time; it writes to the queue continuously.

long lValueToSend = ( long ) pvParameters; /* the value to send is passed in as the task parameter */
xStatus = xQueueSendToBack( xQueue, &lValueToSend, 0 ); /* copies sizeof( long ) bytes into the queue */
if( xStatus != pdPASS )
{
    vPrintString( "Could not send to the queue.\n" );
}
taskYIELD();

- vReceiverTask() specifies a block time of 100 ms. It enters the Blocked state to wait for data to be available, and leaves it when either data is available on the queue or 100 ms expire, which should never happen.

xStatus = xQueueReceive( xQueue, &xReceivedValue, 100 / portTICK_RATE_MS );
if( xStatus == pdPASS )
{
    /* print the data received */
}
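The receiving task converts its 100 ms block time to ticks with `100 / portTICK_RATE_MS`. The arithmetic can be checked with a small host-side sketch. It assumes the classic definition portTICK_RATE_MS = 1000 / configTICK_RATE_HZ and a hypothetical 100 Hz tick. The names `MODEL_TICK_RATE_HZ`, `MODEL_TICK_RATE_MS` and `model_ms_to_ticks()` are invented for illustration.

```c
#include <assert.h>

/* Hypothetical tick rate; in FreeRTOS it comes from configTICK_RATE_HZ. */
#define MODEL_TICK_RATE_HZ 100UL

/* Length of one tick in ms, mirroring 1000 / configTICK_RATE_HZ. */
#define MODEL_TICK_RATE_MS (1000UL / MODEL_TICK_RATE_HZ)

/* Convert a delay in ms to ticks, as "100 / portTICK_RATE_MS" does.
   Integer division truncates, so delays shorter than one tick become 0. */
unsigned long model_ms_to_ticks(unsigned long ms) {
    assert(MODEL_TICK_RATE_MS > 0); /* tick rates above 1000 Hz would give 0 here */
    return ms / MODEL_TICK_RATE_MS;
}
```

With a 100 Hz tick, 100 ms becomes 10 ticks. A 5 ms delay truncates to 0 ticks, which means no blocking at all.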

- Execution sequence

Using Queues to transfer compound types

- It is common for a task to receive data from multiple sources on a single queue.

- Receiver needs to know the data source to allow it to determine how to process the data.

- The queue can be used to transfer structures that contain both the data value and the data source, such as:

typedef struct
{
    int iValue;   // a data value
    int iMeaning; // a code indicating the data source
} xData;

- The controller task performs the primary system function.

- It reacts to inputs and to changes in the system state communicated to it on the queue.

- A CAN bus task encapsulates the CAN bus interfacing functionality and sends values such as the actual motor speed.

- An HMI task encapsulates all the HMI functionality and sends values such as a new set point.
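The following is a minimal sketch of how the controller could dispatch on the data source carried in each structure. The source codes (`SOURCE_CAN_BUS`, `SOURCE_HMI`), the `ControllerState` type and `controller_handle()` are hypothetical. In the real application, each xData would come from xQueueReceive() in the controller task rather than being passed in directly.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical codes stored in iMeaning to identify the data source. */
enum { SOURCE_CAN_BUS = 1, SOURCE_HMI = 2 };

typedef struct {
    int iValue;   /* a data value */
    int iMeaning; /* a code indicating the data source */
} xData;

/* Hypothetical state maintained by the controller task. */
typedef struct {
    int actualSpeed; /* last motor speed reported over the CAN bus */
    int setPoint;    /* last set point entered through the HMI */
} ControllerState;

/* Process one received item; returns 0 if the source is unknown. */
int controller_handle(ControllerState *state, const xData *msg) {
    assert(state != NULL && msg != NULL);
    switch (msg->iMeaning) {
    case SOURCE_CAN_BUS:
        state->actualSpeed = msg->iValue;
        return 1;
    case SOURCE_HMI:
        state->setPoint = msg->iValue;
        return 1;
    default:
        return 0;
    }
}
```

Because the source travels with the value, a single queue can serve every producer, and the receiver needs no knowledge of which task sent each item.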

Example 11 - Sending structures to a queue, with a block time

- Two differences from Example 10

- Receiving task has a lower priority than the sending tasks

- The queue is used to pass structures, rather than simple long integers between the tasks.

- The queue will normally be full because:

- Once the receiving task removes an item from the queue, it is pre-empted by one of the sending tasks, which immediately refills the queue.

- The sending task then re-enters the Blocked state to wait for space to become available on the queue again.

- In vSenderTask(), the sending task specifies a block time of 100 ms.

- So it enters the Blocked state to wait for space to become available each time the queue becomes full.

- It leaves the Blocked state when either space becomes available on the queue, or 100 ms expire without space becoming available (this should never happen, as the receiving task is continuously removing items from the queue).

xStatus = xQueueSendToBack( xQueue, pvParameters, 100 / portTICK_RATE_MS ); /* pvParameters points to the structure to send */
if( xStatus != pdPASS )
{
    vPrintString( "Could not send to the queue.\n" );
}
taskYIELD();

- vReceiverTask() will run only when both sending tasks are in the Blocked state.

- As the sending tasks have higher priorities, they enter the Blocked state only when the queue is full.

- So the receiving task executes only when the queue is already full, and it always expects to receive data, even without a block time.

xStatus = xQueueReceive( xQueue, &xReceivedStructure, 0 );
if( xStatus == pdPASS )
{
    /* print the data received */
}

- Execution sequence: Sender 1 and Sender 2 have higher priorities than the Receiver.

2.4 Working with large data

- It is not efficient to copy the data itself into and out of the queue byte by byte, when the size of the data being stored in the queue is large.

- It is preferable to use the queue to transfer pointers to the data.

- This is more efficient in both processing time and the amount of RAM required to create the queue.

- However, when queuing pointers, extreme care must be taken to ensure that:

- The owner of the RAM being pointed to is clearly defined.

- When multiple tasks share memory via a pointer, they do not modify its contents simultaneously, or take any other action that could make the memory contents invalid or inconsistent.

- Ideally, only the sending task is permitted to access the memory until a pointer to the memory has been queued, and only the receiving task is permitted to access the memory after the pointer has been received from the queue.

- The RAM being pointed to remains valid.

- If the memory being pointed to was allocated dynamically, exactly one task is responsible for freeing the memory.

- No task should attempt to access the memory after it has been freed.

- A pointer should never be used to access data that has been allocated on a task stack. The data will not be valid after the stack frame has changed.
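The ownership rules above can be illustrated with a host-side sketch. It uses a hypothetical single-slot queue (`PointerQueue`, `pq_send()`, `pq_receive()`) that copies sizeof(char *) bytes, as a FreeRTOS queue created with uxItemSize = sizeof(char *) would. The helpers `send_message()` and `receive_message()` are also invented. The sender allocates the buffer on the heap, never on its own stack, and hands ownership over by queuing the pointer. The receiver then becomes the single owner and frees the buffer.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical one-slot queue whose item size is sizeof(char *):
   only the pointer value is copied, never the buffer it points to. */
typedef struct {
    unsigned char slot[sizeof(char *)];
    int full;
} PointerQueue;

int pq_send(PointerQueue *q, const void *pvItemToQueue) {
    if (q->full) return 0;
    memcpy(q->slot, pvItemToQueue, sizeof(char *));
    q->full = 1;
    return 1;
}

int pq_receive(PointerQueue *q, void *pvBuffer) {
    if (!q->full) return 0;
    memcpy(pvBuffer, q->slot, sizeof(char *));
    q->full = 0;
    return 1;
}

/* Sending side: heap-allocate (never a stack buffer), fill, then queue the
   pointer. After a successful send the sender must not touch the buffer. */
int send_message(PointerQueue *q, const char *text) {
    char *pcBuffer = malloc(strlen(text) + 1);
    if (pcBuffer == NULL) return 0;
    strcpy(pcBuffer, text);
    if (!pq_send(q, &pcBuffer)) {
        free(pcBuffer); /* not queued, so the sender still owns it */
        return 0;
    }
    return 1;
}

/* Receiving side: owns the buffer once received and is the only one that
   frees it. Returns the number of characters processed. */
size_t receive_message(PointerQueue *q) {
    char *pcReceived = NULL;
    if (!pq_receive(q, &pcReceived)) return 0;
    size_t length = strlen(pcReceived);
    free(pcReceived);
    return length;
}
```

However large the message, only the size of one pointer passes through the queue storage.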

3. Resource management

3.1 Binary semaphores

- Binary semaphores are the simplest effective way to synchronize tasks; another, even simpler but less effective, way is to poll an input or a resource. A binary semaphore can be seen as a queue that can hold at most one item.

- Creating a semaphore

void vSemaphoreCreateBinary( xSemaphoreHandle xSemaphore );

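- xSemaphore: the handle variable of the semaphore to be created. vSemaphoreCreateBinary() is a macro that assigns the new handle to it (NULL if the semaphore could not be created).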

- Taking a semaphore

- This operation is equivalent to a P() operation or, compared with queues, to a receive operation. A task taking the semaphore must wait for it to be available: it remains Blocked until the semaphore becomes available or until the block time expires (if one was specified).

portBASE_TYPE xSemaphoreTake( xSemaphoreHandle xSemaphore, portTickType xTicksToWait );

- xSemaphore is the semaphore to take. xTicksToWait is the time, in ticks, that the task waits before giving up on taking the semaphore. If xTicksToWait equals portMAX_DELAY and INCLUDE_vTaskSuspend is set to 1 in "FreeRTOSConfig.h", the task waits indefinitely.

- If the take operation succeeds in time, the function returns pdPASS (pdTRUE); if not, pdFALSE is returned.

- Giving a semaphore

- Giving a semaphore can be compared to a V() operation or to writing on a queue.

portBASE_TYPE xSemaphoreGive( xSemaphoreHandle xSemaphore );

- xSemaphore is the semaphore to be given.

- The function returns pdPASS if the give operation was successful, or pdFAIL if the semaphore was already available (a binary semaphore cannot be given twice without an intervening take).
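Because a binary semaphore behaves like a one-item queue, its take and give operations can be modelled without a kernel. In this hypothetical sketch, `BinarySem`, `bin_take()` and `bin_give()` are invented and nothing blocks. A failed take marks the point where a real task would enter the Blocked state. The model starts with the semaphore available, matching the state vSemaphoreCreateBinary() leaves a newly created semaphore in.

```c
#include <assert.h>
#include <stddef.h>

#define MODEL_PASS 1 /* plays the role of pdPASS */
#define MODEL_FAIL 0 /* plays the role of pdFALSE / pdFAIL */

/* Hypothetical model: "available" means the single queue slot is full. */
typedef struct {
    int available;
} BinarySem;

/* Like xSemaphoreTake(s, 0): a P() operation, or a receive on the queue. */
int bin_take(BinarySem *s) {
    assert(s != NULL);
    if (!s->available) return MODEL_FAIL; /* a real task could block here */
    s->available = 0;
    return MODEL_PASS;
}

/* Like xSemaphoreGive(s): a V() operation, or a send to the queue. */
int bin_give(BinarySem *s) {
    assert(s != NULL);
    if (s->available) return MODEL_FAIL; /* the single slot is already full */
    s->available = 1;
    return MODEL_PASS;
}
```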

3.2 Mutexes

- Mutexes are designed to provide mutual exclusion: they guard a shared resource so that only one task accesses it at a time.

- A mutex is used similarly to a binary semaphore, except that the task that takes the mutex must give it back.

- It can be thought of as a token associated with the resource to be accessed.

- A task holds the token, works with the resource, then gives back the token; in the meantime, no other task can obtain the token.

- Priority inheritance

- Priority inheritance is actually the only difference between a binary semaphore and a mutex.

- When higher-priority tasks are waiting for a mutex, the mutex holder's priority is temporarily raised to the highest priority among the waiting tasks, and restored when it gives the mutex back.

- This mechanism mitigates the priority inversion phenomenon, although it does not completely prevent it.

- Using a mutex increases the overall complexity of the application, so mutexes should be avoided whenever possible.
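The inheritance rule can be expressed as a small function. This is a hypothetical model in which `effective_priority()` is invented; it is not kernel code. It computes the priority at which the holder runs while tasks wait for its mutex, assuming, as for FreeRTOS tasks, that a higher number means a higher priority.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model of priority inheritance: the priority a mutex holder
   runs at while other tasks wait for the mutex. */
unsigned effective_priority(unsigned holderBasePriority,
                            const unsigned *waitingPriorities,
                            size_t waitingCount) {
    assert(waitingCount == 0 || waitingPriorities != NULL);
    unsigned effective = holderBasePriority;
    for (size_t i = 0; i < waitingCount; i++) {
        if (waitingPriorities[i] > effective) {
            effective = waitingPriorities[i]; /* inherit the higher priority */
        }
    }
    return effective; /* equals the base priority once nobody is waiting */
}
```

Suppose a priority-1 holder blocks tasks of priority 2, 4 and 1. It then runs at priority 4, so a medium-priority task (say 3) can no longer pre-empt it while the priority-4 task is stuck waiting.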

3.3 Counting semaphore routines

- Creation

- A counting semaphore has a maximum count, the highest value its count can reach, and an initial count, the number of take operations that can succeed right after creation. Both are set when the semaphore is created.

xSemaphoreHandle xSemaphoreCreateCounting( unsigned portBASE_TYPE uxMaxCount, unsigned portBASE_TYPE uxInitialCount );

- uxMaxCount is the capacity of the counting semaphore: the maximum value its count can reach. uxInitialCount is the count (availability) of the new semaphore right after it is created.

- The returned value is NULL if the semaphore could not be created because of a lack of memory; otherwise it is the handle of the new semaphore, used to refer to it.
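A hypothetical host-side model shows how uxMaxCount and uxInitialCount constrain takes and gives. `CountingSem` and the `counting_*` functions are invented, and nothing blocks. An initial count of 0 suits counting events, while an initial count equal to uxMaxCount suits managing a pool of resources.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model of a counting semaphore; nothing blocks. */
typedef struct {
    unsigned count;    /* current availability */
    unsigned maxCount; /* like uxMaxCount */
} CountingSem;

/* Like xSemaphoreCreateCounting(uxMaxCount, uxInitialCount); returns 0 for
   arguments this model rejects. */
int counting_init(CountingSem *s, unsigned maxCount, unsigned initialCount) {
    assert(s != NULL);
    if (maxCount == 0 || initialCount > maxCount) return 0;
    s->count = initialCount;
    s->maxCount = maxCount;
    return 1;
}

/* Like xSemaphoreTake(s, 0): fails when the count is already 0. */
int counting_take(CountingSem *s) {
    if (s->count == 0) return 0;
    s->count--;
    return 1;
}

/* Like xSemaphoreGive(s): fails when the count is already at maxCount. */
int counting_give(CountingSem *s) {
    if (s->count == s->maxCount) return 0;
    s->count++;
    return 1;
}
```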

4. Interrupts

4.1 Handling interrupts

- Interrupts are a mechanism implemented and handled entirely by the hardware: FreeRTOS tasks and the kernel can only provide functions to handle a given interrupt, or trigger one by executing a hardware instruction.

- Consider a microcontroller with 7 interrupt priority levels. Usually, the higher an interrupt's priority number, the higher its priority over other interrupts, but this is not always the case (on ARM Cortex-M cores, for example, a lower number means a higher priority). Interrupt priorities are in no way related to task priorities: an interrupt will always preempt a task.

- A function defined as an interrupt handler cannot freely use the FreeRTOS API: queues and semaphores must not be accessed through the normal functions, but FreeRTOS provides specialized functions to be used in that context.

- E.g., in an interrupt handler, a V() operation on a semaphore must be performed using xSemaphoreGiveFromISR() instead of xSemaphoreGive().

- The prototypes of these functions can differ because the interrupt context raises particular issues. For example, xSemaphoreGiveFromISR() takes an extra pxHigherPriorityTaskWoken parameter. It sets this parameter to pdTRUE if giving the semaphore unblocked a task with a higher priority than the task that was interrupted, so that the ISR can request a context switch before it exits.

- Configured in FreeRTOS with constants in "FreeRTOSConfig.h":

- configKERNEL_INTERRUPT_PRIORITY sets the interrupt priority level used by the tick interrupt.

- configMAX_SYSCALL_INTERRUPT_PRIORITY defines the highest interrupt priority level from which interrupt-safe FreeRTOS API functions can be called. If this constant is not defined, any interrupt handler that uses the FreeRTOS API must execute at configKERNEL_INTERRUPT_PRIORITY.

- Any interrupt whose priority level is greater than configMAX_SYSCALL_INTERRUPT_PRIORITY (or configKERNEL_INTERRUPT_PRIORITY if configMAX_SYSCALL_INTERRUPT_PRIORITY is not defined) is never masked or delayed by the kernel, but is forbidden from using FreeRTOS API functions.
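The rule can be checked with a hypothetical sketch. It assumes seven priority levels, with higher numbers meaning higher priority. It also invents `MODEL_KERNEL_INTERRUPT_PRIORITY` = 1 and `MODEL_MAX_SYSCALL_INTERRUPT_PRIORITY` = 3 as stand-ins for the real constants, whose values and numbering direction are port-specific.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical values for a port on which a higher number means a higher
   interrupt priority (levels 1 to 7). Real values are port-specific. */
#define MODEL_KERNEL_INTERRUPT_PRIORITY      1
#define MODEL_MAX_SYSCALL_INTERRUPT_PRIORITY 3

/* Highest priority from which the ...FromISR() API may be used. */
static int model_api_ceiling(void) {
#ifdef MODEL_MAX_SYSCALL_INTERRUPT_PRIORITY
    return MODEL_MAX_SYSCALL_INTERRUPT_PRIORITY;
#else
    return MODEL_KERNEL_INTERRUPT_PRIORITY; /* fallback when not defined */
#endif
}

/* True if an ISR at this priority may call interrupt-safe FreeRTOS API
   functions; false means it is never masked by the kernel but must not
   call the API at all. */
bool model_isr_may_use_api(int irqPriority) {
    assert(irqPriority >= 1 && irqPriority <= 7);
    return irqPriority <= model_api_ceiling();
}
```

Under these assumed values, interrupts at levels 1 to 3 may use the FromISR functions. Interrupts at levels 4 to 7 keep the lowest latency because the kernel never masks them.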

4.2 Manage interrupts using a binary semaphore

- Interrupt handlers should execute as quickly as possible. One way to achieve this is to create a task that waits on a semaphore for the interrupt to occur, and let this safer portion of code (outside the ISR) actually handle the interrupt.


- An ISR gives a semaphore and unblocks a handler task that processes the interrupt, which keeps the ISR execution much shorter.
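This deferred-processing pattern can be modelled on a host PC. In this hypothetical sketch, `DeferredHandler`, `model_isr()` and `model_handler_step()` are invented. The ISR only gives the semaphore and reports, as the pxHigherPriorityTaskWoken output of xSemaphoreGiveFromISR() does, whether the unblocked handler outranks the interrupted task. In a real ISR, that flag would then be passed to a port-specific macro such as portEND_SWITCHING_ISR(), so that the handler task runs as soon as the ISR returns.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical host-side model of deferred interrupt processing. */
typedef struct {
    bool semaphoreAvailable;  /* the binary semaphore given by the ISR */
    unsigned handlerPriority; /* priority of the handler task */
    unsigned eventsHandled;   /* work done by the handler task */
} DeferredHandler;

/* "ISR": do the minimum, give the semaphore and set *woken only if the
   handler was waiting and outranks the interrupted task. */
void model_isr(DeferredHandler *h, unsigned interruptedTaskPriority, bool *woken) {
    assert(h != NULL && woken != NULL);
    bool handlerWasBlocked = !h->semaphoreAvailable;
    h->semaphoreAvailable = true;
    if (handlerWasBlocked && h->handlerPriority > interruptedTaskPriority) {
        *woken = true; /* the caller initialises *woken to false */
    }
}

/* One iteration of the handler task: take the semaphore, then do the
   lengthy processing outside the ISR. */
bool model_handler_step(DeferredHandler *h) {
    if (!h->semaphoreAvailable) {
        return false; /* a real task would remain Blocked here */
    }
    h->semaphoreAvailable = false;
    h->eventsHandled++;
    return true;
}
```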

Example 12 - Semaphores and ISRs

- This example uses a binary semaphore to unblock a task from within an interrupt service routine, effectively synchronizing the task with the interrupt.

- A simple periodic task is used to generate a software interrupt every 500 milliseconds.

- A software interrupt is used for convenience because of the difficulties in hooking into a real IRQ in a simulated environment.

4.3 Critical sections

- Sometimes a portion of code needs to be protected from any context change, so that a calculation is not corrupted and an I/O operation is not interrupted or interleaved with another. FreeRTOS provides two mechanisms to protect such portions of code, which should be kept as small as possible. One protects against any context change, whether caused by the scheduler or by an interrupt; the other only prevents the scheduler from preempting the task.

- Handling this can be very important because many operations, assignments for instance, may look atomic but actually require several machine instructions (load the variable's address into a register, load the value into another register, then store the value to the memory address using the two registers).
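The danger can be shown deterministically by writing out one bad interleaving by hand. In this hypothetical sketch, `lost_update_demo()` is invented and no real tasks are involved. Two tasks both execute "shared = shared + 1", split into the load, modify and store steps the CPU performs. Task B preempts task A between A's load and A's store.

```c
#include <assert.h>

/* Hand-written interleaving of two increments of the same variable.
   Returns the final value of 'shared'. */
int lost_update_demo(void) {
    int shared = 0;
    int regA = shared;   /* task A: load (reads 0) */
    /* --- context switch to task B --- */
    int regB = shared;   /* task B: load (still reads 0) */
    regB = regB + 1;     /* task B: modify */
    shared = regB;       /* task B: store, shared is now 1 */
    /* --- context switch back to task A --- */
    regA = regA + 1;     /* task A: modify, using its stale copy */
    shared = regA;       /* task A: store, B's increment is lost */
    return shared;       /* 1, not the expected 2 */
}
```

Wrapping each increment in a critical section prevents the switch between the load and the store, so both updates survive.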

- Suspend interrupts

- This form of critical section is very efficient, but it must be kept as short as possible, since it prevents almost all other code in the system from executing. This can stop a task from meeting its timing constraints, or delay the handling of an external event by an interrupt.

/* Ensure access to the PORTA register cannot be interrupted by placing it
   within a critical section. Enter the critical section. */
taskENTER_CRITICAL();

/* A switch to another task cannot occur between the call to taskENTER_CRITICAL()
   and the call to taskEXIT_CRITICAL(). Interrupts may still execute on FreeRTOS
   ports that allow interrupt nesting, but only interrupts whose priority is above
   the value assigned to the configMAX_SYSCALL_INTERRUPT_PRIORITY constant, and
   those interrupts are not permitted to call FreeRTOS API functions. */
PORTA |= 0x01;

/* We have finished accessing PORTA so can safely leave the critical section. */
taskEXIT_CRITICAL();

- A task can start a critical section with taskENTER_CRITICAL() and end it with taskEXIT_CRITICAL(). A critical section may be started while another one is already open (nesting). This makes it much easier for a function that needs a critical section to call other functions that also need one. However, to end a critical section, taskEXIT_CRITICAL() must be called exactly as many times as taskENTER_CRITICAL() was. Generally speaking, the two calls must be as close as possible in the code to keep the section very short.

- Such a critical section is not protected from interrupts whose priority is greater than configMAX_SYSCALL_INTERRUPT_PRIORITY (if defined in "FreeRTOSConfig.h"; if not, consider configKERNEL_INTERRUPT_PRIORITY instead): such interrupts can still execute.

- Stop the scheduler

- A less drastic way to create a critical section is to prevent any other task from preempting the current one, while still letting interrupts do their job. This is achieved by preventing any task from moving from the “Ready” state to the “Running” state, which can be understood as suspending the scheduler. Note that FreeRTOS API functions must not be called while the scheduler is suspended.

/* Write the string to stdout, suspending the scheduler as a method of mutual exclusion. */
vTaskSuspendAll();
{
    printf( "%s", pcString );
    fflush( stdout );
}
xTaskResumeAll();

- xTaskResumeAll() returns pdTRUE if resuming the scheduler caused a context switch (for example, because a higher-priority task became ready while the scheduler was suspended), and pdFALSE otherwise.
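Nesting works because the open sections are counted. This is a hypothetical model: `CriticalState` and the `model_*` functions are invented, and a boolean stands in for the hardware interrupt mask. However, many FreeRTOS ports keep a similar nesting counter for taskENTER_CRITICAL() and taskEXIT_CRITICAL(), and vTaskSuspendAll() and xTaskResumeAll() nest in the same counted way.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical model of nested critical sections. */
typedef struct {
    unsigned nesting;      /* how many sections are currently open */
    bool interruptsMasked; /* stands in for the real interrupt mask */
} CriticalState;

void model_enter_critical(CriticalState *cs) {
    cs->interruptsMasked = true; /* mask first, then count */
    cs->nesting++;
}

void model_exit_critical(CriticalState *cs) {
    assert(cs->nesting > 0); /* an exit without a matching enter is a bug */
    cs->nesting--;
    if (cs->nesting == 0) {
        cs->interruptsMasked = false; /* only the outermost exit unmasks */
    }
}
```

When a helper function opens its own section inside the caller's section, the helper's exit leaves interrupts masked. Only the caller's final exit unmasks them.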

5. Memory management

- In a small embedded system, using malloc() and free() to allocate memory for tasks, queues or semaphores can cause various problems. They are rarely thread safe (a task can be preempted while allocating memory), allocation and deallocation can be nondeterministic, their compiled code can be large, and they can suffer from memory fragmentation.

- Instead, FreeRTOS provides three different memory allocation schemes, each suited to a different situation, but all designed for small embedded systems. Once the situation is identified, the programmer chooses the appropriate scheme once and for all, and the kernel uses it for its own allocations too. It is also possible to implement a custom scheme, or to use one of the three FreeRTOS provides, found in:

"heap_1.c", "heap_2.c", "heap_3.c"

5.1 Prototypes

- All implementations provide the same two functions for allocating and freeing memory:

void *pvPortMalloc( size_t xWantedSize );
void vPortFree( void *pv );

- xWantedSize is the size, in bytes, to be allocated; pv is a pointer to the memory to be freed. pvPortMalloc() returns a pointer to the allocated memory (NULL if the allocation failed).

5.2 Memory allocated once for all

- In small embedded systems, it is possible to create all tasks, queues and semaphores, then start the scheduler and run the entire application without ever needing to free or reallocate any of the structures already allocated, or to allocate new ones. In this extremely simple case, a function to free memory is useless: only pvPortMalloc() is implemented. This implementation can be found in "Source/portable/MemMang/heap_1.c".

- Since this scheme assumes that all memory is allocated before the application actually starts, and that memory never needs to be reallocated or freed, FreeRTOS simply allocates a task's TCB (Task Control Block, the structure FreeRTOS uses to manage a task) and then the memory the task needs (its stack), and repeats this for every task created.

- This scheme allocates a single array whose size is set by the constant configTOTAL_HEAP_SIZE in "FreeRTOSConfig.h", and divides it into smaller blocks as tasks require memory. As a result, the application appears to consume a lot of RAM even before any memory has been allocated.
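A heap_1-style allocator can be sketched as a bump pointer into one static array. The names `model_heap1_malloc`, `MODEL_TOTAL_HEAP_SIZE` and `MODEL_BYTE_ALIGNMENT` are hypothetical stand-ins for pvPortMalloc(), configTOTAL_HEAP_SIZE and the port's alignment. The real heap_1.c differs in detail; for example, it also suspends the scheduler around the allocation.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-ins for configTOTAL_HEAP_SIZE and the port alignment. */
#define MODEL_TOTAL_HEAP_SIZE 64u
#define MODEL_BYTE_ALIGNMENT  8u

/* The whole heap is one statically allocated array, so it occupies RAM from
   start-up, before anything has been allocated. */
static _Alignas(MODEL_BYTE_ALIGNMENT) unsigned char heapArray[MODEL_TOTAL_HEAP_SIZE];
static size_t nextFreeByte = 0; /* offset of the first unallocated byte */

/* heap_1-style allocation: carve the next block off the array; there is no
   matching free function. Returns NULL when the array is exhausted. */
void *model_heap1_malloc(size_t wantedSize) {
    if (wantedSize == 0 || wantedSize > MODEL_TOTAL_HEAP_SIZE) {
        return NULL;
    }
    /* Round up so that every block starts on an aligned boundary. */
    size_t rounded = (wantedSize + MODEL_BYTE_ALIGNMENT - 1u) / MODEL_BYTE_ALIGNMENT
                     * MODEL_BYTE_ALIGNMENT;
    if (rounded > MODEL_TOTAL_HEAP_SIZE - nextFreeByte) {
        return NULL; /* e.g. creating another task or queue would fail here */
    }
    void *block = &heapArray[nextFreeByte];
    nextFreeByte += rounded;
    return block;
}
```

Allocation is simply an addition and a comparison, so it is fast and deterministic. Memory is never returned, which is acceptable only because everything is allocated once, before the scheduler starts.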

5.3 Constant sized and numbered memory

- An application may need to allocate and free memory dynamically. This second scheme can be used if, throughout each task's life cycle, the number and size of the allocated variables remain constant. Its implementation can be found in "Source/portable/MemMang/heap_2.c".

- As with the previous scheme, FreeRTOS uses a large initial array whose size is set by configTOTAL_HEAP_SIZE, so the application appears to consume a lot of RAM. Unlike the previous scheme, vPortFree() is implemented, and since memory can be freed, the allocation algorithm is adapted accordingly.

- Suppose the big initial array has been allocated and freed in such a way that three free blocks are available: the first is 5 bytes, the second 25 bytes and the last 100 bytes. A call to pvPortMalloc(20) needs 20 free bytes to reserve and return. The algorithm returns the second block (25 bytes) and keeps the remaining 5 bytes free for a later call to pvPortMalloc(). It always chooses the smallest free block that can hold the requested size (best fit).

- Such an algorithm can cause a lot of memory fragmentation if allocations are irregular, but it works well if allocations remain constant in size and number.
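The best-fit choice in the 5/25/100-byte example can be reproduced with a small sketch. It is a simplified model: `best_fit_index()` and `best_fit_allocate()` are hypothetical. It only tracks free-block sizes, ignores the per-block header that heap_2 keeps, and leaves the unused remainder of the chosen block free.

```c
#include <assert.h>
#include <stddef.h>

/* Pick the smallest free block that can hold the request.
   Returns the block index, or -1 if no block is large enough. */
int best_fit_index(const size_t *freeSizes, size_t blockCount, size_t wantedSize) {
    int best = -1;
    for (size_t i = 0; i < blockCount; i++) {
        if (freeSizes[i] >= wantedSize &&
            (best < 0 || freeSizes[i] < freeSizes[(size_t)best])) {
            best = (int)i;
        }
    }
    return best;
}

/* Allocate from the chosen block; its unused remainder stays free. */
int best_fit_allocate(size_t *freeSizes, size_t blockCount, size_t wantedSize) {
    int i = best_fit_index(freeSizes, blockCount, wantedSize);
    if (i >= 0) {
        freeSizes[i] -= wantedSize;
    }
    return i;
}
```

Requesting 20 bytes from blocks of 5, 25 and 100 bytes selects the 25-byte block and leaves 5 bytes free in it. This small leftover is the kind of fragment that accumulates when allocation sizes vary.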

5.4 Free memory allocation and deallocation

- This last scheme allows any pattern of allocation and deallocation, but it suffers from the same drawbacks as using malloc() and free(): large compiled code and nondeterministic execution. This implementation wraps the two functions, but makes them thread safe by suspending the scheduler while allocating or freeing memory.

#include <stdlib.h>
#include "FreeRTOS.h"
#include "task.h"

void *pvPortMalloc( size_t xWantedSize )
{
void *pvReturn;

    /* Suspend the scheduler so that no other task can call malloc() concurrently. */
    vTaskSuspendAll();
    {
        pvReturn = malloc( xWantedSize );
    }
    xTaskResumeAll();

    return pvReturn;
}

void vPortFree( void *pv )
{
    if( pv != NULL )
    {
        vTaskSuspendAll();
        {
            free( pv );
        }
        xTaskResumeAll();
    }
}
